At 麻豆社 Research & Development, we are researching how video quality could be enhanced using artificial intelligence (AI) and, in particular, how video can be automatically colourised using recent breakthroughs in machine learning (ML). Colourisation is of interest to broadcasters for restoring historical archived footage to make it more appealing and relatable to modern audiences.

We have now extended our previous work on automatic colourisation by involving human interaction in the colourisation process, i.e. a workflow in which the user can select the colours. In particular, we are researching how to colourise black & white images using other colour images with similar content to guide the process.

- Read more about this work in our preprint conference paper (M. G. Blanch, I. Khalifeh, N. O'Connor, M. Mrak. '', in Proc. of MMSP 2021)

Although our previous work achieved promising results, mapping colours from a greyscale input is still a complex and ambiguous task. For example, a car can be red, blue or any of an infinite range of colours, and the algorithm will select the most probable colour, which may not match what the user expected to see.

That's why we decided to research more conservative solutions that use colour references to guide the system towards more accurate colour predictions. The images in the squares below show examples of greyscale images in one half and the auto-colourised version in the other. The colour example images within the circles influence the colourisation process, giving (in this case) two different looks to the same fox image and two different looks to the same landscape.

Colourisation by example (also called exemplar-based colourisation) improves on fully automatic methods and gives the user or producer more flexibility to achieve the style they desire. However, exemplar-based systems tend to be either highly sensitive to the choice of reference (for example, requiring similar content and similarly positioned and sized objects) or extremely complex and time-consuming.

For instance, before starting the colourisation process, most exemplar-based approaches require a style transfer or similar method to compute correspondences between the greyscale image and the colour reference. This extra step, which transfers the colours used in the reference onto similar content in the target, usually increases the system complexity.
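To make the separate correspondence step concrete, here is a minimal sketch of one simple way to do it, assuming both images have already been mapped to per-position feature vectors. Each target position is matched to its most similar reference position by cosine similarity (a hard nearest-neighbour match), and the reference chrominance at that position is copied across. The function name `warp_reference_colours` and the array shapes are hypothetical and for illustration only; published exemplar-based methods use more elaborate matching.

```python
import numpy as np

def warp_reference_colours(target_feats, ref_feats, ref_ab):
    """Hard nearest-neighbour colour transfer (hypothetical helper).

    target_feats: (N, C) features for N target positions
    ref_feats:    (M, C) features for M reference positions
    ref_ab:       (M, 2) chrominance (e.g. Lab a/b) at each reference position
    Returns (N, 2) chrominance copied from the best-matching reference position.
    """
    # L2-normalise so that the dot product equals cosine similarity
    t = target_feats / np.linalg.norm(target_feats, axis=1, keepdims=True)
    r = ref_feats / np.linalg.norm(ref_feats, axis=1, keepdims=True)
    similarity = t @ r.T               # (N, M) cosine similarities
    best = similarity.argmax(axis=1)   # single best reference match per target position
    return ref_ab[best]
```

The `argmax` makes a hard, all-or-nothing choice per position, so in a pipeline like this the matching is computed as its own stage before colourisation begins.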

We propose a novel exemplar-based neural network, called XCNET, that achieves fast and high-quality colour predictions. Previous methods needed a two-step process, first transferring the style and then colourising, whereas our approach performs both at the same time, simplifying the process and producing faster predictions.

- This open-source software is now available via the

Our approach

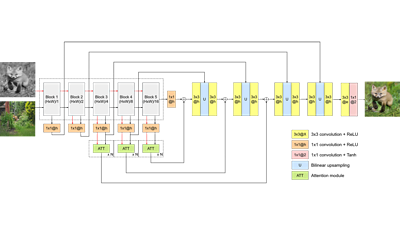

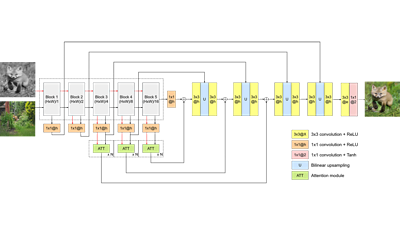

Going deeper, XCNET is composed of three different branches, as shown in the image below. The first branch takes the black & white target (T) and colour reference (R) and outputs volumes of features. This branch uses an image classification neural network trained to analyse complex patterns and generate features that help to identify what an image contains. For example, to classify an image of a dog or cat, the neural network examines lines, shapes and complex structures like the position of the eyes or the skin colour. We use those features to represent the content and style of our target and reference images.
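The idea of a "volume of features" can be illustrated with a toy stand-in for the classification network: one convolution layer, a ReLU and a 2x2 max-pool, applied with the same weights to both T and R. This is only a sketch under stated assumptions: the random filters replace the learned weights of a real pretrained classifier, and feeding the reference as a single luminance channel (using Rec. 601 luma weights) is a simplification chosen here, not a detail of XCNET. The name `extract_features` is hypothetical.

```python
import numpy as np

def extract_features(image, filters):
    """Toy feature extractor standing in for a pretrained classification network.

    image:   (H, W) single-channel array
    filters: (C, k, k) convolution kernels (random here; learned in a real network)
    Returns a (C, (H-k+1)//2, (W-k+1)//2) feature volume: conv -> ReLU -> 2x2 max-pool.
    """
    C, k, _ = filters.shape
    # 'valid' cross-correlation, as computed by CNN convolution layers
    windows = np.lib.stride_tricks.sliding_window_view(image, (k, k))  # (h, w, k, k)
    conv = np.einsum("hwij,cij->chw", windows, filters)
    relu = np.maximum(conv, 0.0)
    # 2x2 max-pooling halves the spatial resolution (an odd last row/column is cropped)
    h2, w2 = relu.shape[1] // 2, relu.shape[2] // 2
    return relu[:, : h2 * 2, : w2 * 2].reshape(C, h2, 2, w2, 2).max(axis=(2, 4))

# Usage: the same (shared) filters process the target and the reference
rng = np.random.default_rng(0)
filters = rng.standard_normal((8, 3, 3))
target = rng.random((32, 32))              # black & white target T
reference_rgb = rng.random((32, 32, 3))    # colour reference R
reference_luma = reference_rgb @ np.array([0.299, 0.587, 0.114])
feat_T = extract_features(target, filters)
feat_R = extract_features(reference_luma, filters)
print(feat_T.shape, feat_R.shape)  # (8, 15, 15) (8, 15, 15)
```

Sharing the same weights for both inputs places T and R in a common feature space, which is what allows their features to be compared in the next branch.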

The second branch integrates attention modules, such as vision transformers, to fuse information from both sources of features. The attention mechanism will identify the most similar patterns between the target and reference and decide how much a reference feature contributes to the colourisation of each target position. Using this process, our network can learn how to perform style transfer automatically during the colourisation process.
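The fusion step can be sketched with standard single-head scaled dot-product attention, where target features act as queries and reference features act as keys and values. This is the generic transformer formulation, not XCNET's exact module. The projection matrices `w_q`, `w_k` and `w_v` would be learned in practice and are passed in here as assumptions, and `attention_fuse` is a hypothetical name.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_fuse(target_feats, ref_feats, ref_values, w_q, w_k, w_v):
    """Single-head scaled dot-product attention (illustrative sketch).

    target_feats: (N, C) target features, projected to queries
    ref_feats:    (M, C) reference features, projected to keys
    ref_values:   (M, D) reference information to transfer (e.g. colour-aware features)
    w_q, w_k: (C, d) projections; w_v: (D, d_v) projection
    Returns fused (N, d_v) features and the (N, M) attention weights.
    """
    q = target_feats @ w_q
    k = ref_feats @ w_k
    v = ref_values @ w_v
    d = q.shape[-1]
    # weights[i, j]: how much reference position j contributes to target position i
    weights = softmax(q @ k.T / np.sqrt(d), axis=-1)
    return weights @ v, weights
```

In contrast to a hard `argmax` match, the softmax weights are differentiable, so the matching between target and reference can be trained jointly with the colourisation itself, which is consistent with the network learning style transfer during colourisation.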

Finally, the combined features are transformed into actual colours using the third branch (pyramid decoder). The name pyramid comes from the concept of decoding colourised outputs at different resolutions (0.125x, 0.25x, 0.5x of the original input size). Multi-resolution predictions help the network gain precision with objects of different sizes and encourage more realistic results.
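One common way to train a decoder with outputs at several resolutions is to compare each output with a correspondingly downsampled ground truth and sum the errors. The sketch below does this with average pooling and an L1 error at the three scales mentioned above. The loss form and the names `downsample` and `multiscale_l1` are assumptions for illustration, not XCNET's published training objective.

```python
import numpy as np

SCALES = (0.125, 0.25, 0.5)  # output resolutions relative to the input size

def downsample(image, factor):
    """Average-pool an (H, W, C) image by an integer factor."""
    H, W, C = image.shape
    cropped = image[: H - H % factor, : W - W % factor]
    return cropped.reshape(H // factor, factor, W // factor, factor, C).mean(axis=(1, 3))

def multiscale_l1(predictions, target):
    """Sum of mean L1 errors between each pyramid output and a matching-size target.

    predictions: dict mapping scale -> (H*scale, W*scale, C) predicted colour channels
    target:      (H, W, C) full-resolution ground-truth colour channels
    """
    total = 0.0
    for scale, pred in predictions.items():
        factor = round(1 / scale)
        total += np.abs(pred - downsample(target, factor)).mean()
    return total
```

Supervising coarse outputs encourages the network to get large regions right, while finer outputs add precision for smaller objects.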

Ultimately, we can apply the current method to restore black & white videos. To do this, we use a colour reference to colourise each video frame independently and then correct any temporal inconsistencies in the resulting frames, as shown here.
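The frame-by-frame workflow can be sketched as a loop that colourises each frame with the same reference and then smooths the predicted chroma over time. The post does not say how temporal inconsistencies are corrected, so the exponential moving average used here is only a naive stand-in, and `colourise_video`, `colourise_frame` and `alpha` are hypothetical names.

```python
import numpy as np

def colourise_video(frames, reference, colourise_frame, alpha=0.8):
    """Colourise frames independently, then smooth the chroma over time.

    frames:          list of (H, W) greyscale (luminance) frames
    reference:       colour reference image passed to every call
    colourise_frame: callable (frame, reference) -> (H, W, 2) chroma prediction
    alpha:           weight given to the previous smoothed chroma (hypothetical parameter)
    Returns a list of (H, W, 3) frames: original luminance plus smoothed chroma.
    """
    outputs, smoothed = [], None
    for frame in frames:
        chroma = colourise_frame(frame, reference)
        # Exponential moving average: a naive stand-in for temporal-consistency correction
        smoothed = chroma if smoothed is None else alpha * smoothed + (1 - alpha) * chroma
        outputs.append(np.dstack([frame, smoothed]))
    return outputs
```

A plain moving average will smear colours across moving objects; a practical correction step would normally account for motion between frames, for example with optical flow.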

This work was carried out within the in collaboration with and the .